Kubernetes is a tool for elegant container orchestration of complex business requirements, such as highly available microservices, and is one of the enabling technologies of the 12-factor application. Initially developed by Google, Kubernetes is currently managed by the Cloud Native Computing Foundation.

In this article, we will talk about what Kubernetes is, why it is a preferred tool for container orchestration, the underlying architecture of Kubernetes, and some Kubernetes objects you are most likely to come across while working with Kubernetes.

- What Is Kubernetes?

- Is Kubernetes Free?

- Kubernetes Platform Overview

- What Is Kubernetes Used For?

- How Does Kubernetes Container Orchestration Work?

- Kubernetes Components

- When You Should Use Kubernetes

- Kubernetes Features and Benefits

- Why Use Kubernetes?

- Final Thoughts

What Is Kubernetes?

Kubernetes is a container orchestration tool used for managing the lifecycle of containers. It improves upon the manual process of deploying and scaling containerized workloads.

We can hardly speak of Kubernetes without mentioning containers. Kubernetes is built upon the foundation of containers. Containers have been of significant benefit to the software development field, specifically in the deployment process. Some of the benefits containers bring to the table are portability, agility, speed, immutability, idempotency and fault isolation.

While containers have become a standard for deployment across several platforms because of the benefits they bring, they also introduce some level of complexity in application workloads. Some of these complexities affect monitoring, data storage, security and orchestration. It is these challenges presented by containers that Kubernetes solves.

There are several container orchestration tools such as Docker Swarm, Google Container Engine, Amazon ECS, Azure Container Service, CoreOS fleet and others, each with their own unique offerings. However, Kubernetes is the most popular and has become the de facto standard for container orchestration. This is largely because it is open source, has many options it offers and has a large community that supports and uses it.

Kubernetes, as the most popular container orchestration platform, plays an integral role in orchestrating many enterprise applications. For those considering Kubernetes, it's good to have a basic understanding of how Kubernetes works, and why it has managed to claim such a large market share. Let's start with a comparison.

Kubernetes Is...NASCAR?

The definition of Kubernetes we listed at the start of the blog is well known in many business technology communities. However, a second statement (while less recognized) is just as important:

Kubernetes is NASCAR.

I should clarify that I don’t mean Kubernetes is NASCAR in the same way that Kubernetes SIG Security co-chair, CNCF Ambassador, and chaotic goose Ian Coldwater means this, which is that there are so many logos vying for eyes in the Cloud Native landscape that it would make even the ghost of Ol’ Dale Earnhart blush.

What I mean by this statement is that the architecture of Kubernetes is all about these steps:

- Go really fast.

- Make a left.

- Do it all over again, at breakneck speed, as fast as possible, not stopping the race for crashes.

So, in essence, Kubernetes is NASCAR.

Is Kubernetes Free?

Kubernetes is a free and open source software derived from Google’s Borg code, with an initial release in June of 2014

Kubernetes is free to use for all organizations, and uses the is released under the Apache 2.0 license. There are many contributors and a plethora of vibrant communities supporting it. As enterprise-grade software build for the enterprise, there’s quite a few players participating and vying for attention in the marketplace (see NASCAR).

Popular Kubernetes Platforms

There are several cloud platforms that provide Kubernetes as a service. These platforms make your work easier by providing you with an interface that makes it easy to deploy applications on Kubernetes without having to worry about setting up and managing the various components of your cluster. Some of them are:

- Amazon EK

- Google Cloud Kubernetes engine

- Digitalocean

- OpenShift

- Azure

An Overview of the Kubernetes Platform

Everything (!) in how Kubernetes is implemented comes down to GoLang goroutines. If you’re not from a team that’s doing Go(lang), you might be more familiar with the now-ubiquitous programming model of Node.JS’s event-loop architecture for web, except goroutines are way more performant.

After much research and photoshop, I am proud to give you the finalized #nodejs System diagram.

https://x.com/RichOnTheWeb/status/494959181871316992

— Totally Radical Richard 🤙 (@TotesRadRichard) July 31, 2014

Why does Kubernetes need a performant concurrency system? Because Kubernetes, as a platform, orchestrates the events required to reach an eventually consistent (read: future) state described in YAML or JSON by the Kubernetes API.

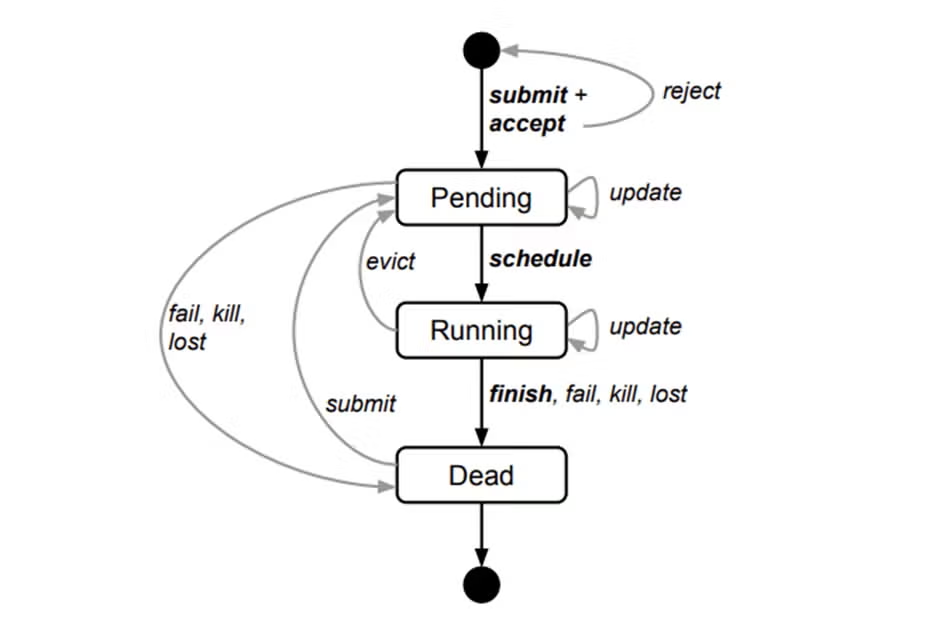

Let’s take a look at the basic unit of Kubernetes, the Pod. Containers are run inside pods, and a pod can have more than one container. Pods in Kubernetes are ephemeral in nature: when they are destroyed all data they generate that is not stored in persistent storage is lost.

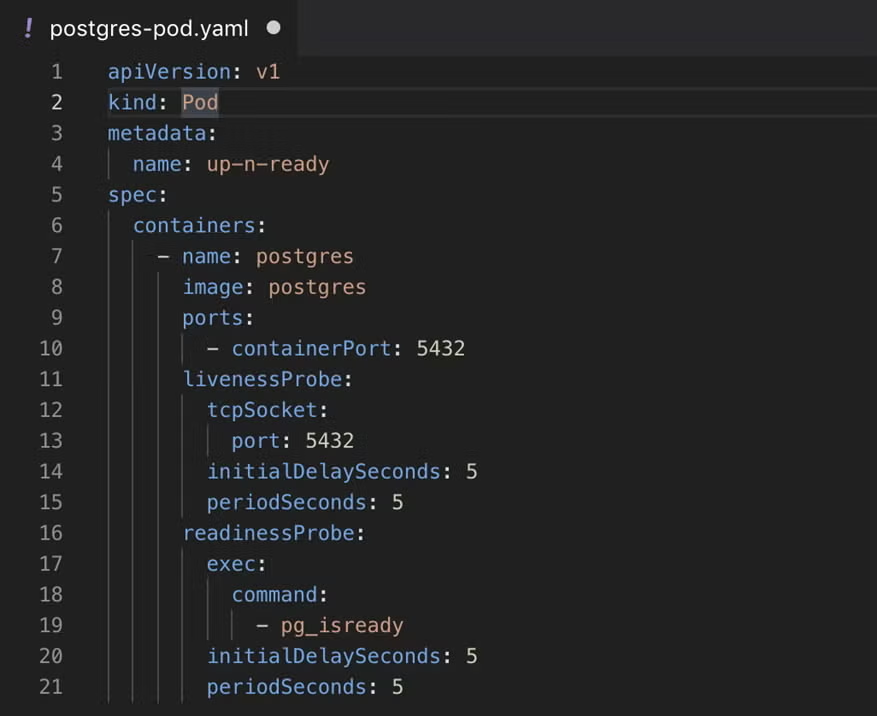

If I send this API object to Kubernetes, the responsibility of Kuberenetes will be to ensure that the state described in the API object is represented in the system. In this case, that there’s a Pod (collection of containers) named ‘postgres’ running the ‘postgres’ image and listening for that traffic on port 5432, checking that the port is listening after 5 seconds, and every five seconds thereafter, and finally running the command ‘pg_isready’ to ensure that, at the application level, postgres is ready to handle requests on that listening port.

Lots of work to do! And that there might be thousands of these pods, and thousands more still of other API objects such as Deployments, ReplicaSets, Persistant Volume Claims, Services, and more? To think that all of this state management can happen on much smaller virtual (!) hosts than you might expect.

The Kubernetes Control Plane () takes care of this at breakneck speed, asynchronously firing off events to other parts of the system, which process these requests in order. If the previously instantiated postgres container crashes (into a barrier wall, to the cheer of the crowd)? The Kubernetes Control Plane immediately (without remorse, or pause for reflection, the trophy in glinting in their eyes) fires off an event to begin working towards rebuilding the state requested.

Kubernetes in one thought? Eventually-consistent orchestration of containers and supporting elements by a fast event loop. In one word? NASCAR.

Kubernetes Objects

Other Kubernetes objects you are likely to use in the deployment process are:

ReplicaSets

ReplicaSets ensure that the correct number of pods are always running at any given time. They keep track of the desired number of pods and the current number of pods running in the cluster. They can be used to deploy pods but the recommended approach is to use deployments.

Deployments

Deployments are used for managing pods. They are preferred to ReplicaSets. Deployments also use ReplicaSets for managing pods. When a deployment is created, it first creates a ReplicaSet which then creates the pods. Deployments have extra functionality and can be used to automatically rollout new changes or rollback to a previous version of your app.

Services

In Kubernetes, a service is used to expose pods. Each pod is assigned its own IP address, and a set of pods are given a single DNS. Traffic coming into pods is load-balanced. There are different types of services, such as LoadBalancer, ClusterIP, and NodePort. When this service is created it is assigned an IP address, and every other object that wants to communicate with pods this service is attached to can use the IP address of that service.

Ingress

Ingress helps to manage external access to services in the cluster. An ingress controller is installed in the cluster, and then an ingress rule is set up for a particular service(s). Using these rules, the ingress controller will automatically redirect traffic to specific services based on the paths specified.

Secrets

Secrets are used for storing and managing sensitive data that are to be made accessible to pods running in the cluster. The data stored in secrets are usually small and in text format.

Other Kubernetes Objects

There are several other Kubernetes objects such as configmaps, daemonsets, namespaces and even custom resources. The ones mentioned here are the ones you will most likely interact with when deploying simple applications in Kubernetes.

What Is Kubernetes Used For?

Kubernetes is used for orchestrating server resources to be highly available and up to date. It is, after all, cluster management software at it’s core.

However, the original intention behind Kubernetes was to fulfil large organizations' desires to implement concepts like DevOps and the 12-Factor Application without having to re-invent the wheel. It’s an open source standard for accomplishing the architectural patterns required to do both DevOps and 12-Factor.

How Does Kubernetes Container Orchestration Work?

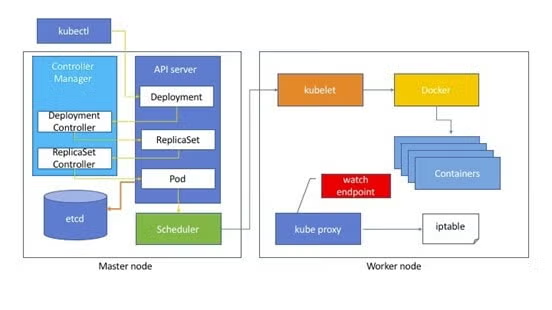

Kubernetes, specifically the Kubernetes Control Plane, is composed of a component services following the event-loop architecture:

- kube-api-server

- kube-controller-manager

- kube-scheduler

- kubelet

- kube-proxy

- kube-dns

- etcd

All together, they’re responsible for ensuring that any API objects we send the kube-api-server get implemented as running containers and component services.

Kubernetes Components

In Kubernetes, applications are deployed to clusters. A cluster is a group of nodes that work together to handle your application workload. Clusters consist of the master and worker nodes. It can have one or more master nodes and zero or more worker nodes. Clustering helps in fault tolerance. For example, you can have multiple nodes on the same cluster running the same containers. If one of the nodes fails, the other nodes can handle the traffic coming into the application.

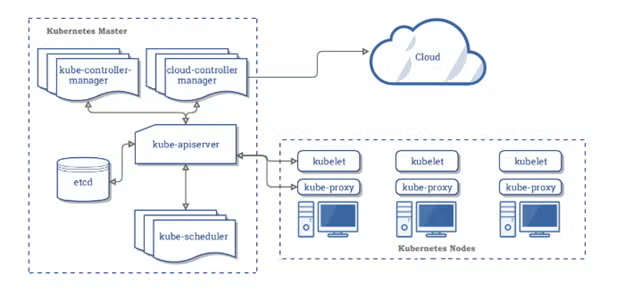

The master node is the node that controls and manages the worker nodes. For example, it is responsible for scheduling and also detecting/responding to events on the cluster. The components of the master node make up the control plane of the cluster.

Control Plane Components

- Kube-control-manager: Kubernetes uses controllers to manage the state of applications in the cluster. These controllers work by comparing the current state of the cluster components to the defined specification. They ensure that the current state and the desired state are consistent with each other. They do this by watching the current state of the cluster stored in etcd through the kube-apiserver and create, update, and delete resources based on changes to the state of either the desired state or the current state.

- Kube-apiserver: It is the communication hub for all cluster components. It exposes the Kubernetes cluster to services outside the cluster via API. It is also the only component that all other master and worker components can directly communicate with. It performs API validation for all resource creation requests. The kubectl command line tool is used to communicate with the kube-apiserver.

- etcd: This is a distributed, highly available, key-value store that is used to store information about pods and nodes deployed to the cluster. It stores the cluster configuration. ETCD only communicates directly with the kube-apiserver.

- Kube-scheduler: The kube scheduler is responsible for assigning unscheduled pods to healthy nodes. To assign a pod, it takes the following requirements into consideration:

- The resource requirement of the pod - cpu, memory etc

- The availability of the resources on the cluster nodes. For example, if there are not available resources on a particular node, the pod will be scheduled on another node with the resources. It will not be scheduled if the resources are not available.

- Pods can also be labeled in the object configuration file so that they have an affinity for certain nodes. For example, you can assign a pod to a node that has GPU available by adding the “GPU” label to the pod definition.

- The nodes can also have certain taints and tolerations that prevent pods from being scheduled on them. A taint is basically like a tag that is added to a node that causes it to repel pods and also allow some. Once a node has been tainted, only a pod with a toleration for that node will be scheduled on that node.

- Kube-control-manager: Kubernetes uses controllers to manage the state of applications in the cluster. These controllers work by comparing the current state of the cluster components to the defined specification. They ensure that the current state and the desired state are consistent with each other. They do this by watching the current state of the cluster stored in etcd through the kube-apiserver and create, update, and delete resources based on changes to the state of either the desired state or the current state.

The kube-control manager is made up of several other controllers. Each of these controllers is a separate process, but they are compiled together and run as a single process to reduce complexity. They are:- Node Controller: monitors and responds to node failure in the cluster.

- Replication controller: responsible for making sure that deployments run the exact number of pod replicas specified in the object configuration document. If a pod dies, another one is brought back up to replace it.

- Service account and token controller: manages the creation of default accounts and API access tokens for new namespaces.

- Other controllers: Other controllers include the endpoint controller, daemonset controller, namespace controller, and deployment controller.

- Cloud-control-manager: Kubernetes uses cloud controllers to provide a consistent standard across different cloud providers. The cloud-control-manager is used to manage controllers that are associated with specific cloud platforms. These controllers abstract resources provided by these cloud platforms and map them to Kubernetes objects.

Node Components

The nodes handle most of the work in the Kubernetes clusters. While the master is responsible for organizing and controlling the nodes, containers are run on the nodes, they also relay information back to the master, and use network proxies to manage access to the containers. The components of the node are:

- Kubelet: The kubelet runs on each node, manages the containers on the node, and talks to the APIServer. When a podspec is passed to the kubelet through the apiserver, the kubelet ensures that the container defined in that podspec is running in a healthy state. Thus the kubelet ensures that the desired state of the containers is maintained.

- Kube-proxy: The kube-proxy is a network proxy that enables the communication between cluster components. It also enables the communication between cluster components and components outside the cluster.

- Container runtime: The container runtime is the software that enables containers to be run in the cluster.

Addons

Addons make use of Kubernetes resources to implement cluster features. If an addon is namespaced, it is placed in the kube-system namespace. Some of these addons are:

- DNS: This is a DNS server that serves DNS records for Kubernetes services. It is compulsory to have a DNS server in your Kubernetes cluster. Containers that are started by Kubernetes add this DNS server to their DNS searches.

- Web-UI dashboard: The web-UI dashboard gives a visual representation of the resources in the Kubernetes cluster. With it, you can configure some of the Kubernetes resources and even view logs of running containers.

- Container resources monitoring: It provides time-series metrics about containers and provides a UI for viewing that data.

- Cluster-level-logging: This stores all container logs in a central store and makes access available via an interface.

When You Should Use Kubernetes

- Multi-cloud architecture: sometimes you may need more than one cloud platform to run your microservices. Some reasons for doing this may be - having access to the different cloud computing solutions or tools provided by these cloud platforms or managing cost. Kubernetes is best suited for this kind of microservice setup.

- Microservice architecture: In the microservice architecture, you split your service into smaller components that interact with each other. In microservices, the smaller components of the entire application are deployed independently and loosely coupled. Kubernetes makes managing microservices very easy.

These microservices are often managed and deployed by different teams or individuals, and it is highly recommended to run them using containers. This is where Kubernetes comes into play as a container orchestration tool. So if you are going to be running a microservice architecture, you should use Kubernetes. - Avoiding cloud and vendor-lock: Vendor or cloud lock is when your application is tied to the services of a particular cloud provider. This forces you to change the structure of your application or how it is being deployed each time you try to change a cloud provider. Kubernetes is vendor-agnostic, so you can easily move your Kubernetes application from one cloud platform to the other using the same deployment configurations. So Kubernetes is the best fit if you are looking for a vendor-agnostic deployment.

- Observability: Kubernetes comes with several tools that enable you to gain insight into the state of your application, resource usage, application logs, and a host of other metrics. If this is part of what you hope to have when you deploy your applications, use Kubernetes.

- High availability: If your application is highly sensitive to downtimes - you do not want your customers to experience downtime, Kubernetes may be your best bet. Because of its automatic rollout, rollback, self-healing, and auto-scaling features, Kubernetes can keep your application running without downtime.

- Slow deployment: If your current application has a slow deployment process, you should consider using Kubernetes. It effectively manages the deployment process and eliminates the error.

- Lower costs: If you are trying to manage costs on your deployed applications, Kubernetes is one of your best options. In a previous section of this article, we discussed the abilities of Kubernetes to scale-up and scale-down according to the needs of your applications. This saves you the cost of having to pay for resources you may not need and ensures that you only pay for what you need and use.

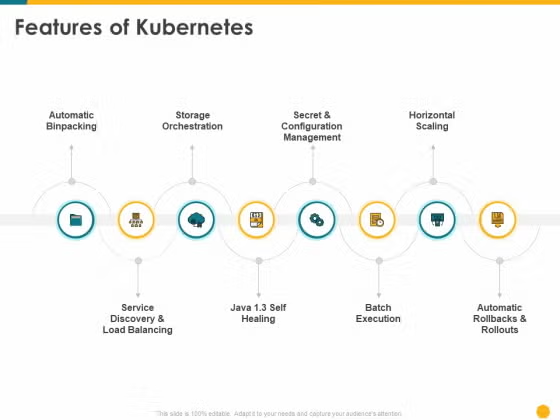

Kubernetes Features and Benefits

- Declarative configuration: Kubernetes lets you describe the state of your cluster declaratively using an object configuration file which is usually written in YAML. For example, in that file, you can define a deployment with 3 replicas, resource requests and limits, containers’ names, and ports to expose. The benefit of this is that:

- It is easy to debug mistakes in your deployment configuration

- You can easily spin up your services on another platform in a matter of minutes

- It eases deployments by CI/CD processes.

- It makes it possible to share configuration among members of a team via an online repository.

- Makes it easy to manage configuration changes.

- Open source: Kubernetes is open source, which saves you cost. Also, because it is open-source, there is a huge community of developers that support it with several other open-source tools to help you manage your deployed applications. For example, we have cert-manager that helps you provision and automatically renew SSL certificates from letsencrypt, Prometheus-Grafana stack that helps you collect data from your cluster and represent that data. We also have the Elasticsearch stack.

- Horizontal scaling: If the nodes (machines) in your cluster are overwhelmed by requests for resources, Kubernetes can provision more nodes (machines) to handle these requests and also take them down when the requests reduce.

- Autoscaling: Just as with horizontal scaling, Kubernetes can also provision new pods (to run containers) to handle traffic as the need arises. When the requests reduce, it also kills the pods to suit the current needs of the system. It does this automatically, and you don’t need to interfere. Just define this in your object configuration file, and Kubernetes will do the rest for you.

- Self-healing: Sometimes things can go wrong with the containers running your application. Maybe they stop service traffic because of an error with one of them. Kubernetes has health checks that help identify when these containers are healthy. If they are not, the pods housing these containers are restarted or killed. New ones are then provisioned immediately to replace the old ones. This prevents your applications from having downtime.

- Load balancing: Kubernetes exposes containers/pods using DNS names. Sometimes, they may be multiple pods running the same application in containers. Kubernetes manages the routing of traffic between these multiple pods. You can also provision a load balancer easily using the Kubernetes LoadBalancer service type and also set up an ingress to route traffic to different services. When there is high traffic to the pods, Kubernetes can also provision new pods and distribute traffic to these pods to create a balance.

- Secrets management: One challenge faced in the deployment process is managing secrets and making sure that you do not expose sensitive information about your application. Kubernetes provides a secure system of storing and updating these secrets without rebuilding your containers or exposing them. The secrets are also easily accessible by your running applications.

- Storage orchestration: Another challenge faced with deployed applications is persistent storage. With Kubernetes, you can easily provision persistent storage and mount it to your running containers.

- Automatic rollouts and rollbacks: In your object configuration file, you can specify the current state of your containers, and Kubernetes will automatically remove the existing (old containers) and rollout new ones based on the specification. You can also rollback to previous versions of your deployed applications in case there is an error with the newly deployed one.

- Automatic bin packing: Kubernetes can schedule pods on specific nodes based on their required resources and other restrictions defined for them. For example, a particular container may require a machine that has a certain CPU or Memory capacity. Kubernetes will only schedule those pods on the nodes that have such resources. Also, if a particular machine has insufficient resources to run a particular pod, Kubernetes can schedule the pod on another node that can support it.

Cost management: Because of its auto-scaling capacity, Kubernetes helps in saving costs. For example, imagine you run a business, and at festive seasons the traffic to your website increases. This means that you may need to get more processing power (machines or CPU or Memory). Before Kubernetes, it may mean that you have to purchase new hardware. If you are using a cloud platform, you may need to manually provision new resources for your machines. This may increase the chances of introducing errors since you have to configure your machines and set up load-balancing or be a waste of resources since you may not need the resources you purchase anymore.

Kubernetes creates these resources on-demand and destroys them immediately there is no need for them. It eliminates errors since the state of your resources has already been defined in your object configuration file and it is done automatically. It also saves you cost since you do not need to purchase resources you do not need at a later time.

- Resource allocation: In Kubernetes, you can specify the resources that your different containers should request and the limits of their consumption. This way, you can properly utilize the resources that are available on your machines.

Why Use Kubernetes?

If you saw the list above, and you're still asking yourself why you might want to use Kubernetes, ask yourself instead how you’re supposed to achieve all of this at once:

Goal | Kubernetes Feature |

|---|---|

Dump the big-iron mainframe! | Runs on commodity hardware in any public or private cloud |

Reduce cloud costs! | Improves elasticity of resources |

Keep my CISO happy! | Security quality gates for deployed software, rolling patching of deployments |

Keep my developers happy! | One of the most in-demand technologies and skills |

Keep my QAC team sane! | Enables CI/CD and DevOps practices at scale |

Achieve web-scale! | Horizontal Pod Autoscaling scales with sensors like CPU use, (autoscaling/v1) or whatever you choose (autoscaling/v2beta2) |

Future-proof my storage strategy while capitalizing on existing investments! | Persistent Volume Claims, and auto-provisioning of SAN resources, like Trident for NetApp |

Final Thoughts

Kubernetes is here to stay, and will be relevant for a long, long time. It’s only going to get more important as architectures change to ARM in the datacenter: concurrency is king.

With as many logos on it’s jacket as we’ve shown, it’s going to be very important to a lot of people. Yes, more important than NASCAR.

Get Expert Guidance and Support for Kubernetes

If you're considering Kubernetes for your application, OpenLogic can help plan and support your journey. Talk to an expert today to see how OpenLogic can help.

Additional Resources

Looking for additional reading on Kubernetes, containers, or container orchestration? These resources are worth your time:

- Webinar - Engineering for Microservices Resiliency

- Datasheet - Kubernetes Foundations Service

- Blog - What Is Harbor?

- Blog - Top 3 Reasons to Choose Kubernetes for Microservices

- Blog - How to Create a Kubernetes Cluster

- Blog - Kubernetes Orchestration Overview

- Blog - How to Move a Monolithic Application to Kubernetes

- Blog - Docker + Grafana + InfluxDB: How to Create a Lightweight Monitoring Solution

- Resource - What Is Enterprise Cloud?