Big Data On Demand with MongoDB

MongoDB, with its scale-out architecture, can be a great fit for applications that need to handle large amounts or irregular bursts of data without a large performance overhead.

In this blog, we discuss some of the problems found in applications processing large volumes of data, how MongoDB can solve those problems, and then walk through how to implement MongoDB to solve our hypothetical data issue.

- Problems With High-Volume Data

- MongoDB Concepts

- Implementing MongoDB

- Final Thoughts

- Additional Resources

Problems With High-Volume Data

If you have a high-volume database server in a single data center serving multiple regions with a high throughput requirement, then you might discover the database is not as performant as expected. Users are complaining of long wait times when accessing data, especially users furthest away from the data center. In addition, you are struggling to keep the most recently used data in memory without the database server evicting pages from memory. What if we could bring important data closer to the user and keep infrequently used data further away while concealing where this data is coming from and keeping memory within a manageable threshold?

A Hypothetical Example

Imagine a fictitious use case of tracking the location of 18-wheel trucks for a freight shipping company, Brutus Trucking. The company owns a fleet of 5,000 18-wheelers scattered throughout the United States, and they plan on aggressive growth by adding another 5,000 trucks over the next five years.

The National Highway Traffic Safety Administration (NHTSA) has mandated that each vehicle’s speed and location be reported every six seconds along with fuel and oil levels, autopilot setting, tire pressure readings (for each tire), and dashboard alarms. An Event Data Recorder (EDR) will be used to capture this data, which will feed an early warning system intended to reduce the number of accidents across the country. All of this data is fed into a database for analytics and must be retained for three years.

There are a number of problems here that need to be addressed:

- Funneling all of this data into one standalone server is obviously going to create a bottleneck. The server as well as the network will be challenged in this scenario.

- Redundancy: A single server is a single point of failure. If it is lost, there is no disaster recovery.

- Memory cannot store an indefinite amount of information.

- Trucks and analytics applications located furthest from the data center incur a significant latency penalty.
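To put rough numbers on these problems, the reporting interval and fleet size from the scenario can be turned into document counts. The following is a back-of-the-envelope sketch in plain JavaScript (runnable with Node.js). It assumes one document per report, trucks reporting around the clock, and 365-day years, none of which the scenario states explicitly. The function names are made up for illustration.

```javascript
// Rough document counts for the Brutus Trucking scenario.
// Assumptions (not part of the scenario): one document per report,
// every truck reporting 24 hours a day, and 365-day years.
const SECONDS_PER_DAY = 24 * 60 * 60;

// Number of reports the whole fleet produces in one day.
function reportsPerDay(trucks, intervalSeconds) {
  return trucks * (SECONDS_PER_DAY / intervalSeconds);
}

// Number of reports that must be kept for the retention period.
function reportsRetained(trucks, intervalSeconds, retentionYears) {
  return reportsPerDay(trucks, intervalSeconds) * 365 * retentionYears;
}

console.log(reportsPerDay(5000, 6));       // 72000000
console.log(reportsPerDay(10000, 6));      // 144000000
console.log(reportsRetained(5000, 6, 3));  // 78840000000
```

Even before the planned growth, that is 72 million reports per day, which illustrates why funneling everything into one standalone server becomes a bottleneck.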

A Hypothetical Solution

What if you could partition this data into two or more regions, such as East and West, and provide redundancy? The data for each truck would be fed into the closest data center. As each piece of data is received, it would be tagged to indicate which region or data center it belongs to. For example, an 18-wheeler located in Seattle would send data to the West data center, and that data would subsequently be replicated to the East data center. An analytics application in Miami could still run a report on trucks traveling the West Coast using the database server in the East without querying the data center in the West, and vice versa. This allows devices to write to the closest server while the data can be read from anywhere.

How could we fix this? A solution to this scenario would be a sharded cluster with MongoDB. Sharding allows us to split the data and load across multiple servers, and we can add servers as necessary as the fleet expands. In addition, data from each datacenter is replicated to the other to provide redundancy.

Let’s show how we can accomplish this.

MongoDB Concepts

A few concepts first:

- Server: A server can contain multiple mongod processes belonging to different replica sets. The terms server and mongod are used interchangeably.

- Replica set: A collection of servers running mongod processes containing copies of identical data. A replica set is preferably deployed on servers in multiple data centers for redundancy and disaster recovery. Typically, a replica set contains an odd number of servers (usually three), but it can contain more or fewer depending on the configuration. For this example, our replica sets will have three mongod processes.

- Primary: One server of the replica set accepts inserts and updates of documents. The rest of the servers are designated as secondaries.

- Secondary: A read-only server belonging to a replica set. Secondaries can be promoted to primary automatically. This can be prevented by a configuration change (discussed later).

- Shard: A shard is a subset of data that optionally can be replicated across servers. For instance, a specific field called a shard key in a document is tagged either East or West depending on what server it came in on. A collection of documents that are all tagged East is a shard.

- Query router: A query router is a process that applications connect to for retrieving and updating data. Applications are completely unaware which server(s) the data came from. Query routers run as a mongos process, preferably on the same machine as the application querying the database (e.g. an application server).

- Configuration database: To determine where specific data is, the query routers reference a configuration database containing metadata. This database is kept up to date by a mongod process and is deployed as a replica set.
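The preference for an odd number of replica set members comes from elections: a primary can only be elected while a majority of voting members can reach each other. The sketch below, in plain JavaScript for Node.js with illustrative function names, shows how the majority and the number of tolerated failures change with the member count.

```javascript
// Majority needed to elect a primary in a replica set with
// the given number of voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many members can be unavailable while a majority remains.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [3, 4, 5]) {
  console.log(
    `${n} members: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`
  );
}
// 3 members: majority 2, tolerates 1 failure(s)
// 4 members: majority 3, tolerates 1 failure(s)
// 5 members: majority 3, tolerates 2 failure(s)
```

Going from three members to four adds a server without adding any fault tolerance, which is why three or five members are the usual choices.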

Implementing MongoDB For Big Data

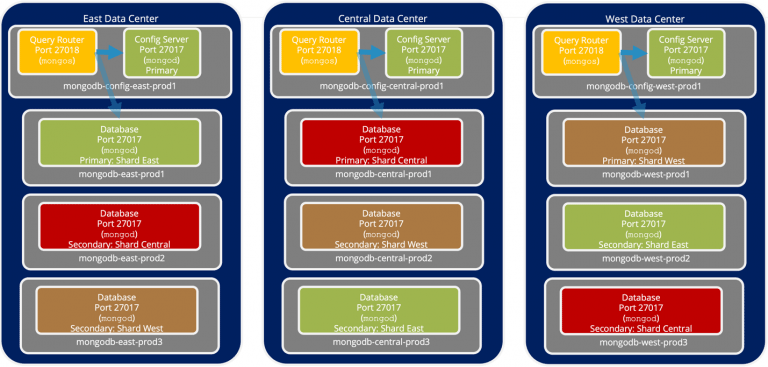

We will install and configure 12 servers in three data centers using MongoDB 4.0 Community Edition on CentOS 7. You might ask, “Where am I going to get 12 servers?” I used Vagrant (version 2.2.3), an open source tool for building virtual machine environments, which lets you spawn multiple instances of CentOS. It is available for a number of operating systems, and you can download the Vagrant files used in this article.

If you are using git, then you can clone the repository:

git clone https://github.com/wcrowell/mongodb-vagrant-demo.git

The instructions note where you can skip a section if you are using Vagrant.

A few important notes:

- It is advised to use logical names instead of the actual names of a server in case a server needs to be replaced.

- I would advise keeping ports 27017 (default Mongo port) and 27018 open between data centers.

Here is a list of the machines created (with Vagrant):

- mongodb-config-east-prod1: mongod process running the configuration database and the query router (mongos).

- mongodb-config-central-prod1: mongod process running the configuration database and the query router (mongos).

- mongodb-config-west-prod1: mongod process running the configuration database and the query router (mongos).

- mongodb-east-prod1: The primary mongod process containing shard East. This instance will be writable.

- mongodb-east-prod2: The secondary mongod process containing shard Central. This instance will be read-only.

- mongodb-east-prod3: The secondary mongod process containing shard West. This instance will be read-only.

- mongodb-central-prod1: The primary mongod process containing shard Central.

- mongodb-central-prod2: The secondary mongod process containing shard West. This instance will be read-only.

- mongodb-central-prod3: The secondary mongod process containing shard East. This instance will be read-only.

- mongodb-west-prod1: The primary mongod process containing shard West.

- mongodb-west-prod2: The secondary mongod process containing shard East. This instance will be read-only.

- mongodb-west-prod3: The secondary mongod process containing shard Central. This instance will be read-only.
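The machine list above places one member of every shard in each data center. As a quick sanity check, the layout can be written down as data and verified. This is a sketch in plain JavaScript for Node.js; the object shape and function names are illustrative, and the data center is inferred from the host naming convention used in this article.

```javascript
// Shard membership as listed above; the first host in each array
// is the intended primary.
const layout = {
  East: ["mongodb-east-prod1", "mongodb-central-prod3", "mongodb-west-prod2"],
  Central: ["mongodb-central-prod1", "mongodb-west-prod3", "mongodb-east-prod2"],
  West: ["mongodb-west-prod1", "mongodb-east-prod3", "mongodb-central-prod2"],
};

// Extract the data center from a host name such as "mongodb-east-prod1".
function dataCenterOf(host) {
  const match = /^mongodb-(east|central|west)-prod\d+$/.exec(host);
  if (!match) throw new Error(`unexpected host name: ${host}`);
  return match[1];
}

// True when the hosts of a shard are spread over all three data centers.
function spansAllDataCenters(hosts) {
  return new Set(hosts.map(dataCenterOf)).size === 3;
}

for (const [shard, hosts] of Object.entries(layout)) {
  console.log(`${shard}: spans all data centers = ${spansAllDataCenters(hosts)}`);
}
```

With this layout, losing an entire data center still leaves two of three members available for every shard.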

The completed environment should look like the following diagram:

Note: Do all operating system instructions as root. mongo specific commands can be done as the user MongoDB runs under (e.g. mongod).

Pre-Installation Setup

If you decide to use the Vagrantfile, skip to the section Initiate the Configuration Database Replica Set, since the Vagrantfile copies these entries into /etc/hosts for you. Otherwise, add the logical names of each server to DNS or append them to the /etc/hosts file.

sudo vi /etc/hosts

10.10.10.60 mongodb-config-east-prod1

10.10.10.61 mongodb-config-central-prod1

10.10.10.62 mongodb-config-west-prod1

10.10.10.63 mongodb-east-prod1

10.10.10.64 mongodb-east-prod2

10.10.10.65 mongodb-east-prod3

10.10.10.66 mongodb-central-prod1

10.10.10.67 mongodb-central-prod2

10.10.10.68 mongodb-central-prod3

10.10.10.69 mongodb-west-prod1

10.10.10.70 mongodb-west-prod2

10.10.10.71 mongodb-west-prod3

Note: The IP addresses/subnets can be changed according to your environment.

Install MongoDB 4.0:

Note: Refer to the documentation for your specific operating system.

Add the MongoDB 4.0 repository:

sudo vi /etc/yum.repos.d/mongodb-org-4.0.repo

Add the following content:

[mongodb-org-4.0]

name=MongoDB Repository

baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/

gpgcheck=1

enabled=1

gpgkey=https://www.mongodb.org/static/pgp/server-4.0.asc

Then install the MongoDB packages:

sudo yum install -y mongodb-org

Configuration Database Servers

In this step, we will be configuring the query routers and configuration database servers:

The configuration servers are a three-member replica set that contains information on where data resides. The query routers connect to the configuration servers to query this metadata.

If you are using Vagrant, go into each of the configuration server directories (mongodb-config-east-prod1, mongodb-config-central-prod1, and mongodb-config-west-prod1) and issue the following command:

vagrant up

You can then skip down to Initiate the Configuration Database Replica Set.

1) On the configuration servers, mongodb-config-east-prod1, mongodb-config-central-prod1, and mongodb-config-west-prod1, change the /etc/mongod.conf file to the following, setting bindIp to the name of the machine (e.g. mongodb-config-east-prod1):

    systemLog:
      destination: file
      logAppend: true
      path: /var/log/mongodb/mongod.log
    storage:
      dbPath: /var/lib/mongo
    processManagement:
      fork: true
      pidFilePath: /var/run/mongodb/mongod.pid
    net:
      port: 27017
      bindIp: mongodb-config-<region>-prod1
    sharding:
      clusterRole: configsvr

Here, the region part of the machine name (east, central, or west) should make the bindIp value match mongodb-config-east-prod1, mongodb-config-central-prod1, or mongodb-config-west-prod1.

Note: We are going to use the logical machine name here instead of an IP address. This keeps the configuration valid if a machine goes down and has to be replaced. You are more than welcome to use an IP address here.

Notice the sharding.clusterRole setting of “configsvr”. This indicates to MongoDB that this instance will be a configuration server. Do not start the configuration servers just yet.

Next, we need to tell MongoDB the configuration servers are part of a replica set by changing the systemd file. Open /usr/lib/systemd/system/mongod.service. At the end of the Environment value, add “--replSet c1”:

Environment="OPTIONS=-f /etc/mongod.conf --replSet c1"

Setting --replSet to c1 means that each configuration database server will be a member of the c1 replica set. You can use another name for the replica set as long as it matches on the other configuration database servers.

You can also set the user and group the mongod process runs under:

User=mongod

Group=mongod

If you do change the User and Group, then make sure to change the ExecStartPre value as well:

ExecStartPre=/usr/bin/chown mongod:mongod /var/run/mongodb

After making the changes to /usr/lib/systemd/system/mongod.service, refresh the service:

sudo systemctl daemon-reload
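Because the three configuration servers differ only in their bindIp value, the mongod.conf from earlier in this section can be generated per region rather than edited by hand. The following is a minimal sketch in plain JavaScript for Node.js; the function name is hypothetical, and it only builds the file content as a string without writing anything to disk.

```javascript
// Regions used for the configuration servers in this article.
const REGIONS = ["east", "central", "west"];

// Build the mongod.conf content for the configuration server in a region.
function renderConfigServerConf(region) {
  if (REGIONS.indexOf(region) === -1) {
    throw new Error(`unknown region: ${region}`);
  }
  const host = `mongodb-config-${region}-prod1`;
  return [
    "systemLog:",
    "  destination: file",
    "  logAppend: true",
    "  path: /var/log/mongodb/mongod.log",
    "storage:",
    "  dbPath: /var/lib/mongo",
    "processManagement:",
    "  fork: true",
    "  pidFilePath: /var/run/mongodb/mongod.pid",
    "net:",
    "  port: 27017",
    `  bindIp: ${host}`,
    "sharding:",
    "  clusterRole: configsvr",
  ].join("\n") + "\n";
}

console.log(renderConfigServerConf("east"));
```

The printed text could be redirected into /etc/mongod.conf on the matching machine. Keeping the template in one place makes it harder for the three files to drift apart.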

Initiate the Configuration Database Replica Set

Start the configuration database servers (mongod) on mongodb-config-east-prod1, mongodb-config-central-prod1, and mongodb-config-west-prod1:

sudo service mongod start

From any of the mongodb-config-*-prod1 servers:

mongo mongodb-config-east-prod1:27017/admin

Initiate the cluster from the JavaScript command prompt:

rs.initiate(

{

_id: "c1",

configsvr: true,

members: [

{ _id : 0, host: "mongodb-config-east-prod1:27017" },

{ _id : 1, host: "mongodb-config-central-prod1:27017" },

{ _id : 2, host: "mongodb-config-west-prod1:27017" }

]

}

)

After running the command, the JSON returned should have the following if the command was successful:

…

"ok" : 1

…

Exit from the JavaScript command prompt and open /var/log/mongodb/mongod.log. You should see the following statements indicating connectivity to the other members in the replica set:

…

2019-01-28T16:09:39.501+0000 I ASIO [Replication] Connecting to mongodb-config-central-prod1:27017

2019-01-28T16:09:39.520+0000 I REPL [replexec-26] Member mongodb-config-central-prod1:27017 is now in state STARTUP2

2019-01-28T16:09:41.526+0000 I REPL [replexec-26] Member mongodb-config-central-prod1:27017 is now in state SECONDARY

…

2019-01-28T16:09:46.598+0000 I ASIO [Replication] Connecting to mongodb-config-west-prod1:27017

2019-01-28T16:09:46.618+0000 I REPL [replexec-25] Member mongodb-config-west-prod1:27017 is now in state STARTUP2

2019-01-28T16:09:58.144+0000 I REPL [replexec-21] Member mongodb-config-west-prod1:27017 is now in state SECONDARY

…

This ends the section of configuration changes to the config servers. Next, we will configure the query routers that will connect to the config servers.
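The log check described above can also be scripted instead of read by eye. The sketch below, in plain JavaScript for Node.js, scans log text for the "is now in state" messages and keeps the latest state per member. The function names are illustrative, and the sample lines are copied from the log excerpt in this section.

```javascript
// Return a Map of member address -> most recent state seen in the log.
function latestMemberStates(logText) {
  const states = new Map();
  const pattern = /Member (\S+) is now in state (\w+)/;
  for (const line of logText.split("\n")) {
    const match = pattern.exec(line);
    if (match) states.set(match[1], match[2]);
  }
  return states;
}

// True when every expected member has reached the SECONDARY state.
function allSecondary(states, expectedMembers) {
  return expectedMembers.every((m) => states.get(m) === "SECONDARY");
}

const sampleLog = [
  "2019-01-28T16:09:39.520+0000 I REPL [replexec-26] Member mongodb-config-central-prod1:27017 is now in state STARTUP2",
  "2019-01-28T16:09:41.526+0000 I REPL [replexec-26] Member mongodb-config-central-prod1:27017 is now in state SECONDARY",
  "2019-01-28T16:09:46.618+0000 I REPL [replexec-25] Member mongodb-config-west-prod1:27017 is now in state STARTUP2",
  "2019-01-28T16:09:58.144+0000 I REPL [replexec-21] Member mongodb-config-west-prod1:27017 is now in state SECONDARY",
].join("\n");

const states = latestMemberStates(sampleLog);
console.log(
  allSecondary(states, [
    "mongodb-config-central-prod1:27017",
    "mongodb-config-west-prod1:27017",
  ])
); // true
```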

Query Routers

In this step, we will configure the query routers on the same machines where the config databases reside.

Note: Normally, the query routers would be placed on an application server, and the application server’s MongoDB driver would be configured to connect to the query router. The goal is to locate the query routers as close to the querying application as possible.

If you are using the Vagrant files, you can skip the following section and continue to Database Servers.

1) On the configuration servers, mongodb-config-east-prod1, mongodb-config-central-prod1, and mongodb-config-west-prod1, create an /etc/mongos.conf file with the following content, setting bindIp to the name of the machine (e.g. mongodb-config-east-prod1):

    systemLog:
      destination: file
      path: "/var/log/mongodb/mongos.log"
      logAppend: true
    sharding:
      configDB: c1/mongodb-config-east-prod1:27017,mongodb-config-central-prod1:27017,mongodb-config-west-prod1:27017
    net:
      bindIp: mongodb-config-<region>-prod1
      port: 27018

Here, the region part of the machine name (east, central, or west) should make the bindIp value match mongodb-config-east-prod1, mongodb-config-central-prod1, or mongodb-config-west-prod1.

Create the following file: /usr/lib/systemd/system/mongos.service

    [Unit]
    Description=High-performance, schema-free document-oriented database
    After=syslog.target
    After=network.target

    [Service]
    User=mongod
    Group=mongod
    Type=forking
    RuntimeDirectory=mongodb
    RuntimeDirectoryMode=755
    PIDFile=/var/run/mongodb/mongos.pid
    ExecStart=/usr/bin/mongos --quiet \
        --config /etc/mongos.conf \
        --pidfilepath /var/run/mongodb/mongos.pid \
        --fork
    LimitFSIZE=infinity
    LimitCPU=infinity
    LimitAS=infinity
    LimitNOFILE=64000
    LimitNPROC=64000

    [Install]
    WantedBy=multi-user.target

Run the following command to enable the service:

sudo systemctl enable mongos.service
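The configDB value in the mongos.conf earlier in this section follows a fixed pattern: the replica set name, a slash, and a comma-separated list of host:port members. A small sketch in plain JavaScript for Node.js (the function name is illustrative) shows how that string is assembled, which can help avoid typos when the member list changes.

```javascript
// Build a "replSetName/host1:port,host2:port,..." connection string.
function configDbString(replSetName, hosts, port) {
  const members = hosts.map((host) => `${host}:${port}`);
  return `${replSetName}/${members.join(",")}`;
}

const configDb = configDbString(
  "c1",
  [
    "mongodb-config-east-prod1",
    "mongodb-config-central-prod1",
    "mongodb-config-west-prod1",
  ],
  27017
);

console.log(configDb);
// c1/mongodb-config-east-prod1:27017,mongodb-config-central-prod1:27017,mongodb-config-west-prod1:27017
```

The replica set name must match the --replSet value given to the configuration servers (c1 in this article).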

Database Servers

East Shard

We will set up the replica set for the East Shard:

If you are using Vagrant, go into each of the East Shard server directories (mongodb-east-prod1, mongodb-central-prod3, and mongodb-west-prod2) and issue the following command:

vagrant up

You can then skip down to Initiate the East Shard Database Replica Set.

1) On the mongodb-east-prod1, mongodb-central-prod3, and mongodb-west-prod2 servers, insert the sharding and replication sections into the /etc/mongod.conf file:

    systemLog:
      destination: file
      logAppend: true
      path: /var/log/mongodb/mongod.log
    storage:
      dbPath: /var/lib/mongo
    processManagement:
      fork: true
      pidFilePath: /var/run/mongodb/mongod.pid
    sharding:
      clusterRole: shardsvr
    replication:
      oplogSizeMB: 10240
      replSetName: East
    net:
      port: 27017
      bindIp: mongodb-<region>-prod<X>

Where region and X combined with the machine name should match mongodb-east-prod1, mongodb-central-prod3, or mongodb-west-prod2.

Initiate the East Shard Database Replica Set

Start up the mongod service on each server in the shard:

sudo service mongod start

2) From any of the mongodb-*-prod1 servers:

mongo mongodb-east-prod1:27017/admin

rs.initiate()

rs.add("mongodb-central-prod3:27017")

rs.add("mongodb-west-prod2:27017")

cfg = rs.config()

cfg.members[2].priority = 0

rs.reconfig(cfg)

The following statement sets the priority of the third member to 0, which prevents that secondary (in another data center) from becoming a primary:

cfg.members[2].priority = 0

Reference: https://docs.mongodb.com/manual/tutorial/configure-secondary-only-replica-set-member/#assign-priority-value-of-0
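Addressing a member by array index works here because the members were added in a known order, but selecting by host name is less fragile. The sketch below, in plain JavaScript for Node.js, applies the same change to a configuration object by host. The helper name is hypothetical, and sampleCfg is a hand-written stand-in for what rs.config() returns, which contains more fields than shown.

```javascript
// Return a copy of a replica set configuration in which the named
// hosts have priority 0 and therefore cannot be elected primary.
function withZeroPriority(cfg, hosts) {
  const members = cfg.members.map((member) =>
    hosts.indexOf(member.host) !== -1
      ? Object.assign({}, member, { priority: 0 })
      : member
  );
  return Object.assign({}, cfg, { members: members });
}

// Simplified stand-in for the East replica set configuration.
const sampleCfg = {
  _id: "East",
  members: [
    { _id: 0, host: "mongodb-east-prod1:27017", priority: 1 },
    { _id: 1, host: "mongodb-central-prod3:27017", priority: 1 },
    { _id: 2, host: "mongodb-west-prod2:27017", priority: 1 },
  ],
};

const updated = withZeroPriority(sampleCfg, ["mongodb-west-prod2:27017"]);
console.log(updated.members.map((m) => `${m.host} priority ${m.priority}`));
```

In the mongo shell, the equivalent result would be passed to rs.reconfig(), as in the steps above. The original object is left unchanged, so the before and after configurations can be compared.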

Central Shard

We will set up the replica set for the Central Shard:

If you are using Vagrant, go into each of the Central Shard server directories (mongodb-central-prod1, mongodb-west-prod3, and mongodb-east-prod2) and issue the following command:

vagrant up

You can then skip down to Initiate the Central Shard Database Replica Set.

1) On mongodb-central-prod1, mongodb-west-prod3, and mongodb-east-prod2, insert the sharding and replication sections into the /etc/mongod.conf file:

    systemLog:
      destination: file
      logAppend: true
      path: /var/log/mongodb/mongod.log
    storage:
      dbPath: /var/lib/mongo
    processManagement:
      fork: true
      pidFilePath: /var/run/mongodb/mongod.pid
    sharding:
      clusterRole: shardsvr
    replication:
      oplogSizeMB: 10240
      replSetName: Central
    net:
      port: 27017
      bindIp: mongodb-<region>-prod<X>

Where region and X combined with the machine name should match mongodb-central-prod1, mongodb-west-prod3, or mongodb-east-prod2.

Initiate the Central Shard Database Replica Set

Start up the mongod service on each server in the shard:

sudo service mongod start

2) From any of the mongodb-config-*-prod1 servers:

mongo mongodb-central-prod1:27017/admin

rs.initiate()

rs.add("mongodb-west-prod3:27017")

rs.add("mongodb-east-prod2:27017")

cfg = rs.config()

cfg.members[2].priority = 0

rs.reconfig(cfg)

West Shard

We will set up the replica set for the last shard, the West:

If you are using Vagrant, go into each of the West Shard server directories (mongodb-west-prod1, mongodb-east-prod3, and mongodb-central-prod2) and issue the following command:

vagrant up

You can then skip down to Initiate the West Shard Database Replica Set.

1) On mongodb-west-prod1, mongodb-east-prod3, and mongodb-central-prod2, insert the sharding and replication sections into the /etc/mongod.conf file:

    systemLog:
      destination: file
      logAppend: true
      path: /var/log/mongodb/mongod.log
    storage:
      dbPath: /var/lib/mongo
    processManagement:
      fork: true
      pidFilePath: /var/run/mongodb/mongod.pid
    sharding:
      clusterRole: shardsvr
    replication:
      oplogSizeMB: 10240
      replSetName: West
    net:
      port: 27017
      bindIp: mongodb-<region>-prod<X>

Where region and X combined with the machine name should match mongodb-west-prod1, mongodb-east-prod3, or mongodb-central-prod2.

Initiate the West Shard Database Replica Set

Start up the mongod service on each server in the shard:

sudo service mongod start

2) From any of the mongodb-config-*-prod1 servers:

mongo mongodb-west-prod1:27017/admin

rs.initiate()

rs.add("mongodb-east-prod3:27017")

rs.add("mongodb-central-prod2:27017")

cfg = rs.config()

cfg.members[2].priority = 0

rs.reconfig(cfg)

Shards

Start the query routers (mongos) on mongodb-config-east-prod1, mongodb-config-central-prod1, and mongodb-config-west-prod1:

sudo service mongos start

From mongodb-config-east-prod1, connect to the query router (mongos):

mongo mongodb-config-east-prod1:27018/admin

Note: Make sure you are connecting to the query router on port 27018 and not 27017, or you will receive the error: “no such command: 'addShard'”

Add each replica set to the cluster as a shard:

db.runCommand({addShard:"East/mongodb-east-prod1:27017", name: "East"})

db.runCommand({addShard:"Central/mongodb-central-prod1:27017", name: "Central"})

db.runCommand({addShard:"West/mongodb-west-prod1:27017", name: "West"})

We will create a database named TRUCKS and indexes on a collection called CHECKPOINTS.

use TRUCKS

db.CHECKPOINTS.createIndex(

{ "containerKey" : 1,

"_id" : 1

},

{"name" : "containerKey_1__id_1", background : true}

);

db.CHECKPOINTS.createIndex(

{ "containerKey" : 1,

"eventId" : 1

},

{"name" : "containerKey_1_eventId_1", background : true}

);

use admin

sh.addShardTag("East", "East")

sh.addShardTag("Central", "Central")

sh.addShardTag("West", "West")

sh.enableSharding("TRUCKS")

use TRUCKS

sh.shardCollection("TRUCKS.CHECKPOINTS", { "containerKey" : 1 })

sh.addTagRange("TRUCKS.CHECKPOINTS", { "containerKey": 100 }, { "containerKey" : 200 }, "East")

sh.addTagRange("TRUCKS.CHECKPOINTS", { "containerKey": 200 }, { "containerKey" : 300 }, "Central")

sh.addTagRange("TRUCKS.CHECKPOINTS", { "containerKey": 300 }, { "containerKey" : 400 }, "West")

Exit out of the JavaScript prompt.
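The tag ranges defined above include their lower bound and exclude their upper bound, so a containerKey of 200 belongs to Central rather than East. The following sketch, in plain JavaScript for Node.js, mirrors the three ranges to show which zone a given key falls into. The zoneFor helper is illustrative only and is not part of MongoDB.

```javascript
// The same ranges passed to sh.addTagRange() above:
// min is inclusive, max is exclusive.
const zoneRanges = [
  { min: 100, max: 200, zone: "East" },
  { min: 200, max: 300, zone: "Central" },
  { min: 300, max: 400, zone: "West" },
];

// Return the zone for a containerKey, or null if no range covers it.
function zoneFor(containerKey) {
  const range = zoneRanges.find(
    (r) => containerKey >= r.min && containerKey < r.max
  );
  return range ? range.zone : null;
}

console.log(zoneFor(150)); // East
console.log(zoneFor(200)); // Central
console.log(zoneFor(399)); // West
console.log(zoneFor(400)); // null
```

Documents whose containerKey falls outside every range, such as 400 here, are not pinned to a zone and may be placed on any shard.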

Loading Test Data

Download checkpoints.json and import it through the query router:

mongoimport --host mongodb-config-east-prod1 --port 27018 --db TRUCKS --collection CHECKPOINTS --file /common/checkpoints.json

Get back to a JavaScript prompt with the query router:

mongo mongodb-config-east-prod1:27018/admin

Verify shard distribution from a mongo> prompt:

use TRUCKS

db.CHECKPOINTS.getShardDistribution()

Since we inserted four documents per shard, you should see a total of 12 documents equally distributed across the cluster:

Shard East at East/mongodb-central-prod3:27017,mongodb-east-prod1:27017,mongodb-west-prod2:27017

data : 492B docs : 4 chunks : 1

estimated data per chunk : 492B

estimated docs per chunk : 4

Shard West at West/mongodb-central-prod2:27017,mongodb-east-prod3:27017,mongodb-west-prod1:27017

data : 516B docs : 4 chunks : 2

estimated data per chunk : 258B

estimated docs per chunk : 2

Shard Central at Central/mongodb-central-prod1:27017,mongodb-east-prod2:27017,mongodb-west-prod3:27017

data : 492B docs : 4 chunks : 2

estimated data per chunk : 246B

estimated docs per chunk : 2

Totals

data : 1KiB docs : 12 chunks : 5

Shard East contains 32.8% data, 33.33% docs in cluster, avg obj size on shard : 123B

Shard West contains 34.39% data, 33.33% docs in cluster, avg obj size on shard : 129B

Shard Central contains 32.8% data, 33.33% docs in cluster, avg obj size on shard : 123B

You can verify this by connecting to mongodb-east-prod1 and confirming that only four documents are present:

mongo mongodb-east-prod1:27017/TRUCKS

Note: If mongodb-east-prod1 is not the primary (e.g. you get a NotMasterNoSlaveOk error), then you must first allow the shell to read data by doing the following:

db.setSlaveOk()

Then get the documents from the CHECKPOINTS collection:

db.CHECKPOINTS.find()

This should return the four documents in the East shard of the CHECKPOINTS collection.
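The percentages in the getShardDistribution() output can be reproduced from the byte counts it reports, which is a handy cross-check when the numbers grow larger. This is a small sketch in plain JavaScript for Node.js; the byte counts are copied from the output above, and the function name is illustrative.

```javascript
// Data size per shard in bytes, as reported in the output above.
const dataBytes = { East: 492, West: 516, Central: 492 };

// Percentage of the cluster's data held by each shard.
function dataShares(bytesByShard) {
  const total = Object.values(bytesByShard).reduce((sum, b) => sum + b, 0);
  const shares = {};
  for (const [shard, bytes] of Object.entries(bytesByShard)) {
    shares[shard] = (bytes / total) * 100;
  }
  return shares;
}

const shares = dataShares(dataBytes);
for (const [shard, pct] of Object.entries(shares)) {
  console.log(`${shard}: ${pct.toFixed(2)}% of data`);
}
```

The computed shares agree with the reported values to within rounding: roughly 32.8% each for East and Central, and roughly 34.4% for West.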

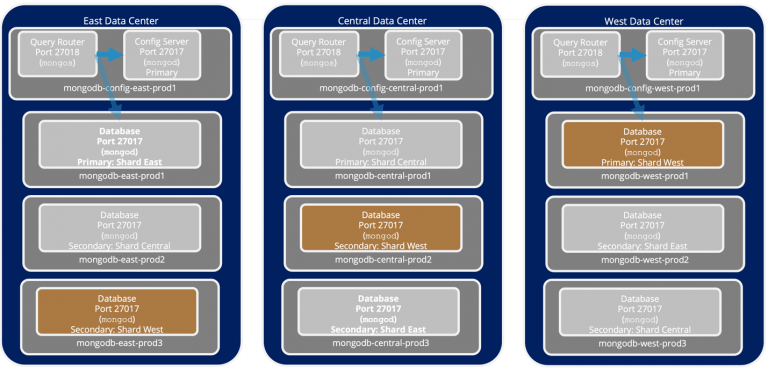

Starting the Cluster

Start the config database servers first, then the database servers, and finally the query routers.

Start the configuration database servers by issuing sudo service mongod start on each, in the order listed:

- mongodb-config-east-prod1

- mongodb-config-central-prod1

- mongodb-config-west-prod1

Start the database servers by issuing sudo service mongod start on each, in the order listed:

- East Shard: mongodb-east-prod1 (primary), mongodb-central-prod3 (secondary), and mongodb-west-prod2 (secondary)

- Central Shard: mongodb-central-prod1 (primary), mongodb-west-prod3 (secondary), and mongodb-east-prod2 (secondary)

- West Shard: mongodb-west-prod1 (primary), mongodb-east-prod3 (secondary), and mongodb-central-prod2 (secondary)

Finally, start the query routers (mongos) on mongodb-config-east-prod1, mongodb-config-central-prod1, and mongodb-config-west-prod1:

sudo service mongos start
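The startup order above can be written down as data so that it is easy to review or feed into a provisioning script. The sketch below, in plain JavaScript for Node.js, only builds and prints the ordered list of host and command pairs; it does not execute anything. The structure and names are illustrative assumptions.

```javascript
// Hosts in the order they should be started.
const configServers = [
  "mongodb-config-east-prod1",
  "mongodb-config-central-prod1",
  "mongodb-config-west-prod1",
];

// Shard members, intended primary first.
const shardMembers = {
  East: ["mongodb-east-prod1", "mongodb-central-prod3", "mongodb-west-prod2"],
  Central: ["mongodb-central-prod1", "mongodb-west-prod3", "mongodb-east-prod2"],
  West: ["mongodb-west-prod1", "mongodb-east-prod3", "mongodb-central-prod2"],
};

// Config servers first, then shard members, then query routers.
function startupPlan() {
  const steps = [];
  for (const host of configServers) {
    steps.push({ host: host, command: "sudo service mongod start" });
  }
  for (const hosts of Object.values(shardMembers)) {
    for (const host of hosts) {
      steps.push({ host: host, command: "sudo service mongod start" });
    }
  }
  for (const host of configServers) {
    steps.push({ host: host, command: "sudo service mongos start" });
  }
  return steps;
}

startupPlan().forEach((step, i) => {
  console.log(`${i + 1}. ${step.host}: ${step.command}`);
});
```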

Final Thoughts

For large-scale applications handling a lot of data, MongoDB can provide the means to elegantly handle that data without the performance issues present in other database types.

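It's also important to note that MongoDB isn't a silver bullet for all data needs within applications. MongoDB 4.0 supports multi-document ACID transactions on replica sets, but not yet across the shards of a sharded cluster, so applications that depend heavily on ACID transactions may still be better served by a relational database.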

Additional Resources

Looking for additional reading regarding open source databases like MongoDB? Here are a few of our favorites:

- Blog - RDBMS vs. NoSQL

- Blog - Cassandra vs. MongoDB

- Blog - Top 3 Open Source Databases

- Support - OpenLogic Supported Open Source Databases

- Resource Collection - Intro to Open Source Databases
