ActiveMQ Persistence: Connection Pooling with c3p0 and JDBC

ActiveMQ supports both persistent and non-persistence delivery. Here, we explain ActiveMQ persistence using c3p0 connection pooling with JDBC.

Why ActiveMQ Persistence Is Important

ActiveMQ persistence is important to ensure that a message survives if a broker restarts.

This blog will walk through the steps necessary to create such a configuration. You'll learn the benefits of using connection pooling with your JDBC persistence configuration in ActiveMQ.

Back to topWhy Use JDBC For Database Connection Pooling?

JDBC allows you to create a connection pool.

Even with connection pooling, JDBC is not likely to ever be as fast as the traditional KahaDB journaling database, as you’ll see below. KahaDB is a NoSQL-style data store, with an append-only write mechanism, and it is not held back by things like data relations and spindle contention. There are other ways besides pooling to speed up JDBC, such as load balancing across a cluster of database instances, but even with these enhancements you are likely to see better performance in KahaDB.

So, why would you want to consider JDBC? Several reasons:

- Familiar clustering and replication models using traditional RDBMS utilities

- Does not share the same deficiencies of KahaDB, such as inefficient indexing and sometimes incomplete checkpoint/cleanups

- Easy transparency, pending messages can be inspected using simple SQL queries

- No-SPOF high availability (HA) with traditional RDBMS failover and clustering mechanisms

Given that LevelDB/Zookeeper has been deprecated, JDBC persistence is now the only reliable, native means of achieving a true, no-SPOF HA solution.

Back to topWhat Is c3p0 Connection Pooling?

c3p0 is a connection pooling library. It' s a robust and mature option offered by Machinery for Change.

The library is distributed under LGPL v2.1, and can also be distributed under the EPLv1.0 if you desire. It has been around for a long time, and tested in many high-performance JDBC enterprise applications. The details and value of connection pooling is well known and documented, so we won’t dive into the details of that in this blog. We’ll just concentrate on using it in tandem with a native JDBC driver in ActiveMQ.

Back to topc3p0 Connection Pooling Example in ActiveMQ

Here's a c3p0 connection pooling example in ActiveMQ.

1. Get c3p0

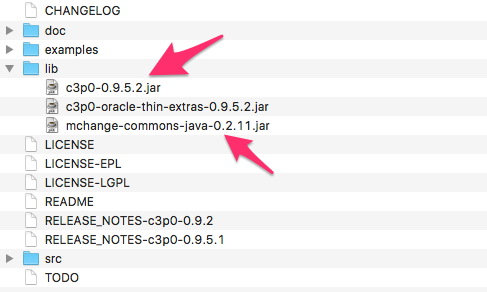

For starters, you’ll need to grab the current version of c3p0 from the product’s SourceForge page. When you download and extract the .zip, you’ll see two files in the /lib directory which will need to be copied into the lib directory of your ActiveMQ installation:

These two files are c3p0-[version].jar, and its sole dependency, mchange-commons-java-[version].jar.

Place these two .jar files in the lib directory of ActiveMQ, or make those files available to the program’s classpath if you are using an embedded instance.

Since we’re using JDBC, if you haven’t already copied the .jar distribution of your database of choice’s driver into ActiveMQ’s lib directory, do that as well. For this blog, I’m using PostgreSQL, so I’ve copied the PostgreSQL driver:

Once you have all your dependencies in place, you’ll need to configure your c3p0 DataSource in activemq.xml. For Postgres, the configuration will look something like:

<bean id="postgres-ds" class="com.mchange.v2.c3p0.ComboPooledDataSource" destroy-method="close">

<property name="driverClass" value="org.postgresql.Driver" />

<property name="jdbcUrl" value="jdbc:postgresql://localhost:5432/activemq" />

<property name="user" value="activemq" />

<property name="password" value="activemq" />

<property name="acquireIncrement" value="1" />

<property name="minPoolSize" value="20" />

<property name="maxPoolSize" value="100" />

<property name="numHelperThreads" value="10" />

<property name="testConnectionOnCheckin" value="true"/>

<property name="idleConnectionTestPeriod" value="100" />

<property name="maxIdleTimeExcessConnections" value="80"/>

<property name="automaticTestTable" value="contest" />

</bean>In real life, your JDBC URL references the actual IP of your database server rather than “localhost.” If you are using a different database than Postgres, you will have different values for driverClass and jdbcUrl. For instance, for Microsoft SQL Server, your driverClass will be com.microsoft.sqlserver.jdbc.SQLServerDriver and your JDBC URL will be along the lines of: jdbc:sqlserver://localhost:1433;databaseName=activemq;user=activemq;password=activemq. Consult your JDBC driver’s documentation for more information on proper usage here.

Once that has been configured, you’ll just need to change your persistence adapter configuration to use the newly created c3p0 datasource with something like:

<persistenceAdapter>

<jdbcPersistenceAdapter dataSource="#postgres-ds" />

</persistenceAdapter>2. Configure the Database

Your database will need a little bit of pre-configuration before ActiveMQ can utilize it. In general, you just need a new database, in this case we’re calling it “activemq”, and a user who has full permissions on that database, also called “activemq” in our example.

Since we’re using Postgres, I’ve also configured my Postgres instance to allow a connection to the database in pg_hba.conf. Please consult your database documentation for information on how to set this up.

3. Test It Out

That’s it! If everything has been configured correctly, when you fire up your ActiveMQ instance, ActiveMQ will connect to your database and automatically create your schema. Note that the JDBC persistence adapter automatically creates a locking table for shared master/slave, so you can use this same configuration on multiple ActiveMQ instances to create active/passive pairs for high availability. You’ll want to configure a lease-locking mechanism for the best reliability.

Once the schema has been created, ActiveMQ will by default still try to create this schema every time the broker starts, leading to some nasty (but entirely benign) warning messages. If you’d like to, you can add the createTablesOnStartup=”false” flag to your JDBC persistence adapter configuration to get rid of these warnings. Just know that if you point the broker to a new database, the schema will not be created for you automatically:

<persistenceAdapter>

<jdbcPersistenceAdapter dataSource="#postgres-ds" createTablesOnStartup=”false” />

</persistenceAdapter>4. Use Throughput Improvements

We are using a simple load procedure for these tests, built with Camel using its DataSet component. It sends 100,000 messages through with no producer delay. The messages are produced, consumed, produced to another destination, and then consumed and dumped to log:

<route>

<description>Camel Load Test</description>

<from uri="dataset:myDataSet?produceDelay=-1"/>

<setBody><constant>test c3p0 load</constant></setBody>

<to uri="activemq:loadQueue1"/>

</route>

<route>

<from uri="activemq:loadQueue1"/>

<to uri="activemq:loadQueue2" />

</route>

<route>

<from uri="activemq:loadQueue2" />

<to uri="log:myLog?level=DEBUG" />

</route>All load testing was done without network transport and to a local database, so that we can eliminate as many outside factors as possible.

Let's compare the throughput of a non-pooling JDBC connection versus using c3p0.

Using a non-pooling JDBC connection through Postgres

This JDBC configuration looks like:

<bean id="postgres-ds" class="org.springframework.jdbc.datasource.DriverManagerDataSource">

<property name="driverClassName" value="org.postgresql.Driver"/>

<property name="url" value="jdbc:postgresql://localhost:5432/activemq" />

<property name="username" value="activemq"/>

<property name="password" value="activemq"/>

</bean>Here are the results of that test:

The blue line represents the number of messages per-second for each set of 1000 messages, and the orange line represents the moving average. After 100,000 messages of throughput, we ended up at an average of 94.98 messages per second.

Not what we’d call great throughput, especially for anyone used to a non-RDBMS persistence store.

Using the configuration demonstrated earlier, we get:

Wow! Much faster. We have an average of 277.25 messages per second here, a 291.9% improvement over our non-pooled connection.

Back to topOther Connection Pooling Options

Alternative: PGPool

As a side note, some databases, like Postgres, come with their own native pooling and don’t require a third-party pooling library like c3p0. This won’t always be the case (we’re looking at you, MS SQL Server), so for databases which don’t have their own native pooling, c3p0 is an excellent choice. However, you’ll find that native pooling performs very similarly.

Check out our load test results using Postgres’s native PGPoolingDataSource class, configured as such:

<bean id="postgres-ds" class="org.postgresql.ds.PGPoolingDataSource" destroy-method="close">

<property name="serverName" value="localhost"/>

<property name="databaseName" value="activemq"/>

<property name="portNumber" value="0"/>

<property name="user" value="activemq"/>

<property name="password" value="activemq"/>

<property name="dataSourceName" value="postgres"/>

<property name="initialConnections" value="1"/>

<property name="maxConnections" value="10"/>

</bean>Here are the results, using the same load test mechanism:

As you can see, these are very close to the c3p0 results, closing with an average of 277.79 messages per second after processing 100,000 messages.

Reality check

Well, we can certainly improve upon the native JDBC persistence by making use of connection pooling. Now for the bad news. I hate to burst your bubble, but, check out how the native KahaDB implementation (with no optimization) compares:

An unfair comparison, for sure. As mentioned, a NoSQL-style database is always going to better at this sort of thing than RDBMS. In this case, we end up with an average of 418.85 messages per second after 100,000 messages, a 150.77% increase. Given the limitations of KahaDB and the deprecation of LevelDB, however, you may not have any choice but to use the JDBC persistence.

So, the top line, KahaDB, is the clear winner. We already knew that, but again, KahaDB may not be acceptable for many users. The two middle lines are our c3p0 and PGPool solutions, and we can see that by the time we get to 100,000 messages, the results are nearly identical. So, either of these are excellent ways to improve your throughput if you need to use JDBC. Our bottom line is a non-pooled JDBC solution, and, as we now know, we can improve upon this configuration dramatically by adding connection pooling to our configuration.

Back to topGet More Out of ActiveMQ

ActiveMQ is a great tool for messaging. But to get the most out of ActiveMQ, you'll need the help of experts.

The OpenLogic team is here to help. We provide around-the-clock access to Tier 3/4 open source architects ready to support, consult, and educate your team to solve issues across your entire software stack and development lifecycle.

See for yourself how ActiveMQ support, training, and services will help your business.